Enabling TensorFlow to Recognize Images via a Mobile Device

What can mobile add to AI?

Things have changed dramatically: half of the Fortune 500 companies have disappeared since 2000. Just a few years ago, startups and companies promoted artificial intelligence merely as an “extra add-on” to their business models. Today, you can hardly find a startup that is not trying to exploit the perks of artificial intelligence to the fullest.

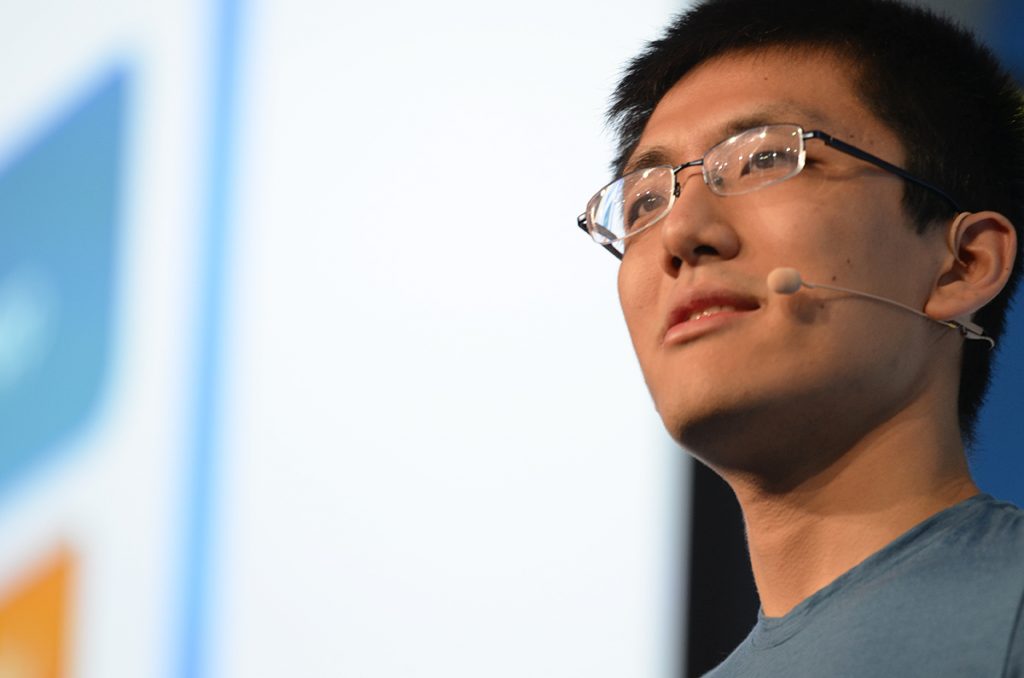

When it comes to machine learning, training a model is still seen as a very compute-intensive task, so it is usually carried out on a server. In his session at Google Cloud Next 2017, Yufeng Guo, a developer advocate for Google, explored whether machine learning can be brought to mobile devices, which are lower-powered and cannot scale out to multiple virtual machines.

“Artificial intelligence is well-poised to revolutionize how we compute, how we connect, and where we do all these things, and make it ubiquitous.” —Yufeng Guo, Google

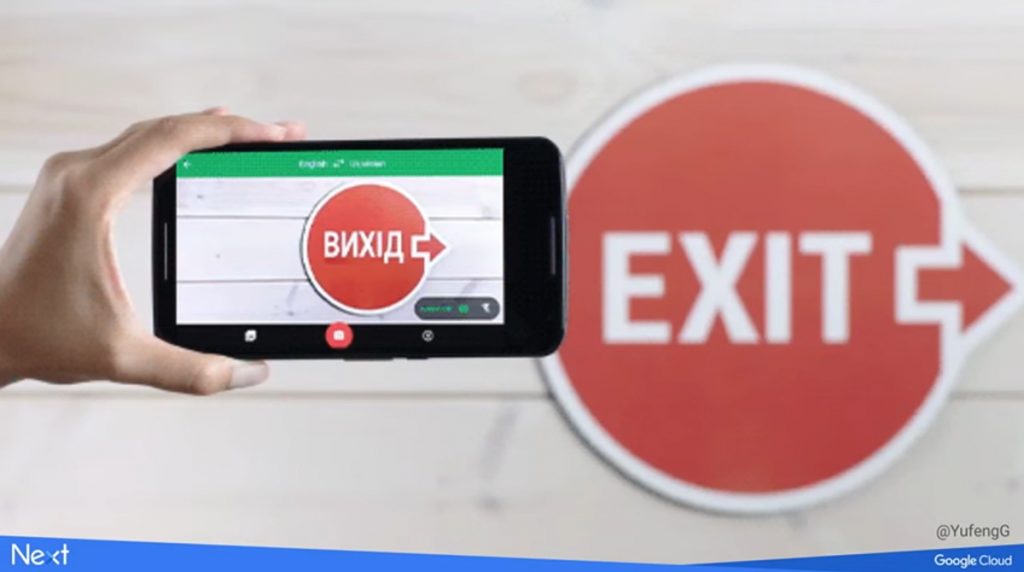

Having a mobile app call a server for predictions, as a web app or website does, works well enough. However, running predictions with machine learning on the device itself delivers a far better user experience. A good example is Google Translate, which uses on-device machine learning to overlay translated text on the camera view.

Convolutional neural networks behind the scenes

Yufeng demonstrated custom image classification with a model that “lives on the device,” without tedious data collection and labeling, weeks of training, or writing distributed code.

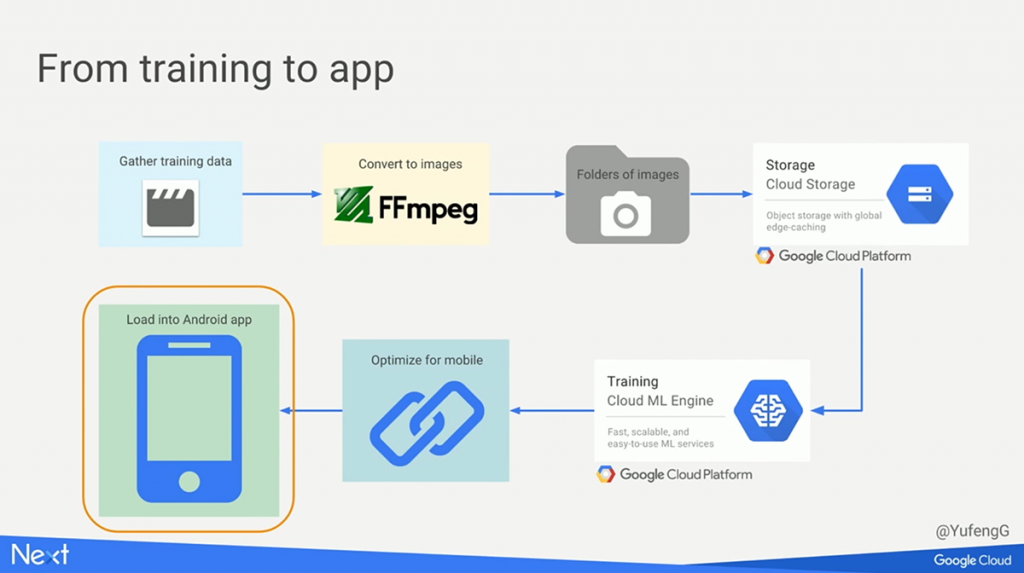

So, what are the steps to go from training to an app?

- Gather training data. Record a smartphone video of whatever you want the model to recognize, then split the video into frames. This gives you a folder full of images to train on. Yufeng used FFmpeg to convert the video into images.

- Upload the images to the cloud. Yufeng stored the pictures in Google Cloud Storage and trained the model on Cloud ML Engine.

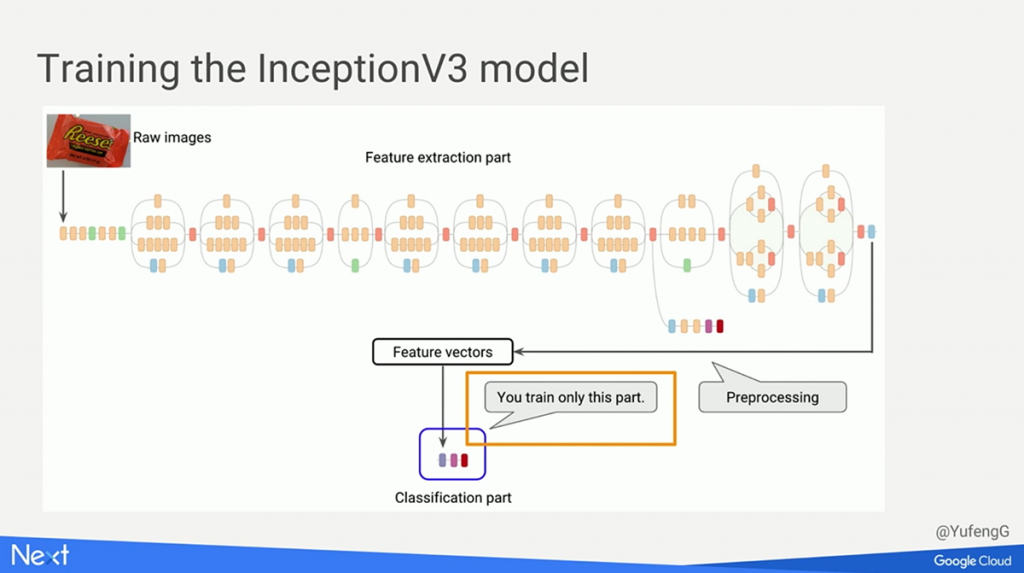

- Kick off the training. Under the hood, this relies on a convolutional neural network: the Inception V3 model, which is 48 layers deep. (Note: back in 2011, it was impossible to train a model more than four layers deep, while the network that won the ImageNet challenge in 2014 had 22 layers.) Only the last layer is actually trained. It learns to sort the images into buckets, one per class, while all the other values in the network stay “frozen” (i.e., they are not trained).
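The data-gathering and upload steps above can be sketched as follows, assuming one smartphone video per object and a folder layout of `images/<label>/` (one subfolder per class, which is the layout TensorFlow's image-retraining example expects). FFmpeg's `fps` filter and `gsutil -m cp -r` are real tools, but the function names, the 5-frames-per-second rate, and the bucket path are illustrative assumptions, not Yufeng's exact commands.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def frame_extraction_cmd(video: str, out_dir: str, fps: int = 5) -> list[str]:
    """Build an FFmpeg command that samples `fps` frames per second from
    `video` and writes numbered JPEGs (frame_0001.jpg, ...) into `out_dir`."""
    pattern = str(Path(out_dir) / "frame_%04d.jpg")
    return ["ffmpeg", "-i", video, "-vf", f"fps={fps}", pattern]


def upload_cmd(local_dir: str, bucket_uri: str) -> list[str]:
    """Build a gsutil command that copies `local_dir`, including its
    per-class subfolders, to a Cloud Storage location."""
    # -m copies files in parallel; -r recurses into subdirectories.
    return ["gsutil", "-m", "cp", "-r", local_dir, bucket_uri]


def extract_frames(label: str, video: str, root: str = "images", fps: int = 5) -> Path:
    """Run FFmpeg so that the frames for class `label` land in root/<label>/."""
    out_dir = Path(root) / label
    out_dir.mkdir(parents=True, exist_ok=True)
    subprocess.run(frame_extraction_cmd(video, str(out_dir), fps), check=True)
    return out_dir
```

For example, `extract_frames("mug", "mug.mp4")` followed by `subprocess.run(upload_cmd("images", "gs://my-bucket/training-images/"), check=True)` would stage a hypothetical "mug" class; the file and bucket names are placeholders. Building the commands as lists keeps them testable and avoids shell-quoting issues.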
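The “train only the last layer” idea from the training step can be illustrated without TensorFlow. This minimal sketch assumes each image has already been passed through the frozen network, leaving a fixed feature vector (TensorFlow's retraining example calls these “bottlenecks”; for Inception V3 they have 2,048 values). Only a new softmax layer is trained on top, using plain gradient descent on the cross-entropy loss. The function names and hyperparameters are illustrative assumptions, not the code used in the session.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_final_layer(features, labels, num_classes, lr=0.5, epochs=300, seed=0):
    """Train only a new softmax layer (weights W, bias b) on fixed feature
    vectors from a frozen network; nothing upstream is updated."""
    rng = random.Random(seed)
    dim = len(features[0])
    W = [[rng.uniform(-0.01, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    b = [0.0] * num_classes
    n = len(features)
    for _ in range(epochs):
        grad_W = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            logits = [sum(w * xi for w, xi in zip(W[k], x)) + b[k] for k in range(num_classes)]
            probs = softmax(logits)
            for k in range(num_classes):
                # Gradient of cross-entropy w.r.t. logit k: p_k - 1[k == y]
                err = probs[k] - (1.0 if k == y else 0.0)
                grad_b[k] += err
                for j in range(dim):
                    grad_W[k][j] += err * x[j]
        # Full-batch gradient-descent update, averaged over the examples
        for k in range(num_classes):
            b[k] -= lr * grad_b[k] / n
            for j in range(dim):
                W[k][j] -= lr * grad_W[k][j] / n
    return W, b


def predict(W, b, x):
    """Return the index of the class with the highest logit."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return max(range(len(logits)), key=logits.__getitem__)
```

In Keras, the same design corresponds to loading `tf.keras.applications.InceptionV3(include_top=False, pooling="avg")`, setting its `trainable` attribute to `False`, and adding a `Dense` softmax layer with one unit per class. Because only that small layer learns, training takes minutes rather than weeks.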

Downsizing the model

Then comes the challenge of making the model work on mobile, Android in this particular case. The Android version of TensorFlow lacks certain operations, and phones have little compute power, so the model has to be stripped down. (The model produced in Yufeng's experiment was 84 megabytes, which is simply too large.)
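One part of stripping a model down is removing nodes the app never runs, such as training-only ops or image-decoding ops that a mobile runtime may lack. The sketch below shows the idea on a toy graph stored as plain Python dictionaries; it is not TensorFlow's API. On real TensorFlow graphs, the Graph Transform tool's `strip_unused_nodes` transform and the `optimize_for_inference` script perform this kind of pruning. The node names, op types, and the `SUPPORTED` set are hypothetical.

```python
from collections import deque

# Toy graph with hypothetical node names: each node maps to (op_type, input_names).
GRAPH = {
    "jpeg_bytes": ("Placeholder", []),
    "DecodeJpeg": ("DecodeJpeg", ["jpeg_bytes"]),
    "Mul": ("Mul", ["DecodeJpeg"]),
    "weights": ("Const", []),
    "conv": ("Conv2D", ["Mul", "weights"]),
    "final_result": ("Softmax", ["conv"]),
    "labels": ("Placeholder", []),
    "loss": ("SoftmaxCrossEntropyWithLogits", ["conv", "labels"]),
}

# Hypothetical set of ops the mobile runtime can execute.
SUPPORTED = {"Placeholder", "Mul", "Const", "Conv2D", "Softmax"}


def strip_unused(graph, inputs, outputs):
    """Keep only the nodes needed to compute `outputs` when the app feeds `inputs`."""
    inputs = set(inputs)
    keep = set()
    queue = deque(outputs)
    while queue:
        name = queue.popleft()
        if name in keep:
            continue
        keep.add(name)
        if name not in inputs:
            # Walk backwards through the nodes that produce this one.
            queue.extend(graph[name][1])
    # Fed inputs become placeholders, cutting off whatever produced them.
    return {name: ("Placeholder", []) if name in inputs else graph[name] for name in keep}


def unsupported_ops(graph, supported):
    """List the op types in `graph` that the runtime cannot execute."""
    return sorted({op for op, _ in graph.values() if op not in supported})
```

Here, `strip_unused(GRAPH, ["Mul"], ["final_result"])` drops the decoding and loss nodes, so `unsupported_ops` on the result comes back empty while the full graph still reports two unsupported op types.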

The Graph Transform tool can downsize the model by quantizing its space-consuming 32-bit values to 8-bit ones. The result is a 20-megabyte model whose accuracy is still decent, because neural networks tolerate this loss of precision well.
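Since each 32-bit weight becomes a single byte, weight storage shrinks roughly fourfold: 84 MB / 4 = 21 MB, in line with the 20-megabyte result. The sketch below illustrates one common scheme, linear min/max quantization per tensor, in plain Python. It is similar in spirit to the Graph Transform tool's `quantize_weights` transform, but the exact scheme and function names here are assumptions rather than the tool's implementation.

```python
def quantize(weights):
    """Linearly map float weights onto 256 levels (one byte each) between
    their minimum and maximum; returns the codes plus (min, scale)."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    codes = bytes(round((w - lo) / scale) for w in weights)
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Recover approximate float weights from the 8-bit codes."""
    return [lo + c * scale for c in codes]


def storage_bytes(n_weights):
    """Bytes for n float32 weights vs. n 8-bit codes plus a float32 min and scale."""
    return 4 * n_weights, n_weights + 2 * 4
```

Each restored weight is off by at most half a quantization step (`scale / 2`), which is why accuracy typically degrades only slightly.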

There are two main ways to deliver the model to users:

- built into the app (which makes the app heavier)

- as a separate file to download

Each option involves a trade-off. Building the model into the app keeps it secure, while shipping it as a separate file makes it easier to roll out updates. The choice depends on your priorities.
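A minimal sketch of the update flexibility that a separately downloaded file offers, assuming the app also ships a bundled copy as a fallback and that each model file carries a version number. `ModelFile` and `choose_model` are hypothetical names; a real app would express this logic in Java/Kotlin or Swift.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelFile:
    """A model on disk with a version number (hypothetical metadata)."""
    path: str
    version: int


def choose_model(bundled: ModelFile, downloaded: Optional[ModelFile]) -> ModelFile:
    """Prefer a downloaded model only when it is newer than the bundled one."""
    if downloaded is not None and downloaded.version > bundled.version:
        return downloaded
    return bundled
```

With this design, a new model can be pushed without an app release, while users who are offline or who have never downloaded an update still get a working model.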

TensorFlow makes it possible to combine mobile and machine learning on both Android and iOS, and it also supports Raspberry Pi, as well as CPUs, GPUs, and TPUs.

Want details? Watch the video!

About the expert

Yufeng Guo is a developer advocate for the Google Cloud Platform, where he works to make machine learning more understandable and usable for all. He is interested in combining IoT devices, big data, and machine learning, and is always enthusiastic about learning new technologies.