Golang Internals, Part 6: Bootstrapping and Memory Allocator Initialization

This post is the continuation of our Golang Internals series. It explores the bootstrapping process, which is key to understanding the Go runtime, in more detail. In this part, we will run through the second portion of the starting sequence, learn how arguments are initialized, what functions are called, etc.

The starting sequence

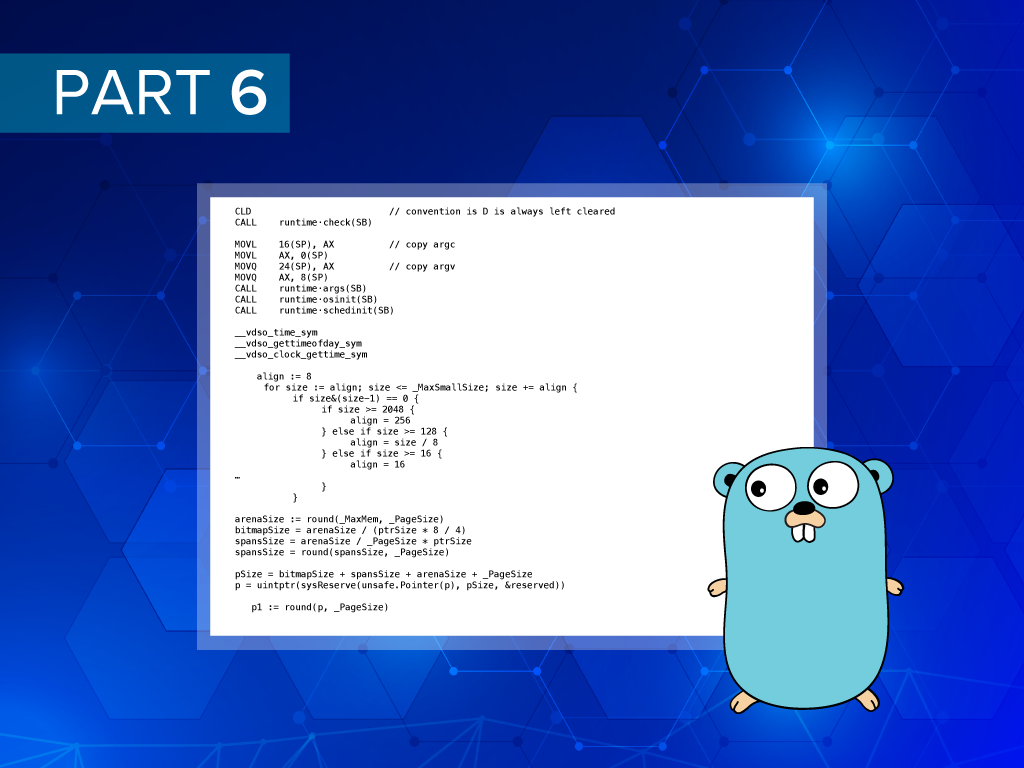

We will pick up our exploration from where we left off last time—the runtime.rt0_go function. There is still a part of it that we haven’t looked at.

CLD // convention is D is always left cleared

CALL runtime·check(SB)

MOVL 16(SP), AX // copy argc

MOVL AX, 0(SP)

MOVQ 24(SP), AX // copy argv

MOVQ AX, 8(SP)

CALL runtime·args(SB)

CALL runtime·osinit(SB)

CALL runtime·schedinit(SB)

The first instruction (CLD) clears the direction flag of the FLAGS register. This flag affects the direction of string processing.

The next function is a call to the runtime.check function, which is also not very valuable for our purposes. The runtime just tries to create instances of all built-in types, check their sizes and some other parameters, etc., and it panics if something goes wrong. You can easily explore this function on your own.

Analyzing arguments

The next function, runtime.Args, is somewhat more interesting. Apart from storing arguments (argc and argv) in static variables, on Linux systems, it is responsible for analyzing the ELF auxiliary vector and initializing syscall addresses.

This requires some explanation. When the operating system loads a program into memory, it initializes the initial stack for this program with some data in a predefined format. At the top of the stack, lay the arguments—pointers to environment variables. At the bottom, we can find the “ELF auxiliary vector,” which is actually an array of records that contains some other useful information, for example, the number and size of program headers. (For more on the ELF auxiliary vector format, please check out this article.

The runtime.Args function is responsible for parsing this vector. Out of all the information that it contains, the runtime only uses startupRandomData, which mainly serves for initializing hashing functions and pointers to locations for some syscalls. The following variables are initialized as shown below.

__vdso_time_sym

__vdso_gettimeofday_sym

__vdso_clock_gettime_sym

They are used for obtaining the current time in different functions. All these variables have default values. This allows Golang to use the vsyscall mechanism to call the corresponding functions.

The runtime.osinit and runtime.schedinit functions

The next function called during the startup sequence is runtime.osinit. On Linux systems, the only thing it does is initialize the ncpu variable that holds the number of CPUs in the system. This is done via a syscall.

runtime.schedinit—the next function in the startup sequence—is more interesting. It begins by obtaining the current goroutine, which is, in fact, a pointer to the g structure. We have talked about how this pointer is stored when discussing the TLS implementation. Next, it calls runtime.raceinit. We will skip the discussion of runtime.raceinit, because this function is normally not called when checking for race conditions is not enabled. After that, some other initialization functions are called.

Let’s explore them one at a time.

Initializing traceback

The runtime.tracebackinit function is responsible for initializing traceback. Traceback is a stack of functions that were called before we got to the current point of execution. For example, we can see it each time a panic occurs. Traceback is generated by a given program counter by calling a function called runtime.gentraceback. For this function to work, we need to know the addresses of some built-in functions (e.g., because we don’t want them to be included into the traceback). runtime.tracebackinit is responsible for initializing these addresses.

Verifying linker symbols

Linker symbols are data emitted by the linker to the executable and the object file. Most of these symbols’ contents have been discussed in Golang Internals, Part 3: The Linker, Object Files, and Relocations. In the runtime package, linker symbols are mapped to the moduledata struct. The runtime.moduledataverify function is responsible for performing some checks against this data and verifying that it has the correct structure and is not corrupted.

Initializing the stack pool

To understand the next initialization step, you need a bit of knowledge about how stack growth is implemented in Go. When a new goroutine is created, a small fixed-size stack is allocated for it. When the stack reaches some threshold, its size is doubled, and the stack is copied to another location.

There is still a lot of detail on how reaching this threshold is determined and how Go adjusts pointers in the stack. We have already touched on some of these things in our previous blog posts, when talking about the stackguard0 field and function metadata. You can also find a lot of useful information on the subject in this document.

Go uses a stack pool to cache currently unused stacks. The stack pool is an array initialized in the runtime.stackinit function. Each item in this array contains a linked list of stacks of the same size.

Another variable initialized at this stage is runtime.stackFreeQueue. It also contains a linked list of stacks, but these are added to the list during garbage collection and are cleared after it is finished. Note that only 2 KB, 4 KB, 8 KB, and 16 KB stacks are cached. Larger ones are allocated directly.

Initializing the memory allocator and size classes

The process of memory allocation is described in this source code comment. We strongly encourage you to read it, if you want to understand how memory allocation works. Initialization of the memory allocator is located in the runtime.mallocinit function, so let’s take a closer look at it.

The first thing we can see here is that runtime.mallocinit is calling another function—initSizes, which is responsible for calculating size classes. But what size does a class have? When allocating a small object (less than 32 KB), the Go runtime first rounds its size up to a predefined class size. So, the allocated block of memory can only have one of the predefined sizes that is usually larger than what is required for the object itself. This leads to a small memory wastage, but it enables you to easily re-use allocated memory blocks for different objects.

The initSizes function is responsible for calculating these classes. At the top of this function, we can see the following code.

align := 8

for size := align; size <= _MaxSmallSize; size += align {

if size&(size-1) == 0 {

if size >= 2048 {

align = 256

} else if size >= 128 {

align = size / 8

} else if size >= 16 {

align = 16

…

}

}

As we can see, the smallest two size classes are 8 and 16 bytes. Subsequent classes are located in every 16 bytes up to 128 bytes. From 128 to 2,048 bytes, classes are located in every size/8 bytes. After 2,048 bytes, size classes are located in every 256 bytes.

The initSizes method initializes the class_to_size array, which converts a class (here, by class we mean its index in the class list) to its size. It also initializes the class_to_allocnpages array that stores data on how many memory pages should be obtained from the OS to fill one object of a given class, and two more arrays—size_to_class8 and size_to_class128. These serve for conversion from object size to a corresponding class index. The first one converts object sizes smaller than 1 KB, and the second one is for object sizes of 1–32 KB.

Virtual memory reservation

The next thing the mallocinit function does is reserve virtual memory for future allocations. Let’s see how this is done on x64 architectures. First of all, we need to initialize the following variables:

arenaSize := round(_MaxMem, _PageSize) bitmapSize = arenaSize / (ptrSize * 8 / 4) spansSize = arenaSize / _PageSize * ptrSize spansSize = round(spansSize, _PageSize)

arenaSizeis the maximum amount of virtual memory that can be reserved for object allocations. On 64-bit architectures, it is equal to 512 GB.bitmapSizecorresponds to the amount of memory reserved for the garbage collector (GC) bitmap. This is a special memory type used to show where exactly pointers are located in memory and whether the object, which is pointed to, is marked by GC.spansSizeis the amount of memory reserved for storing an array of pointers to all memory spans. A memory span is a structure that wraps a block of memory used for object allocations.

Once all these variables have been calculated, the actual reservation is done.

pSize = bitmapSize + spansSize + arenaSize + _PageSize p = uintptr(sysReserve(unsafe.Pointer(p), pSize, &reserved))

Finally, we can initialize the mheap global variable that is used as central storage for all memory-related objects.

p1 := round(p, _PageSize)

mheap_.spans = (**mspan)(unsafe.Pointer(p1))

mheap_.bitmap = p1 + spansSize

mheap_.arena_start = p1 + (spansSize + bitmapSize)

mheap_.arena_used = mheap_.arena_start

mheap_.arena_end = p + pSize

mheap_.arena_reserved = reserved

Note that, from the beginning, mheap_.arena_used is initialized with the same address as mheap_.arena_start, because nothing has been allocated yet.

Initializing the heap

Next, the mHeap_Init function is called. The first thing that is done here is allocator initialization.

fixAlloc_Init(&h.spanalloc, unsafe.Sizeof(mspan{}), recordspan, unsafe.Pointer(h), &memstats.mspan_sys)

fixAlloc_Init(&h.cachealloc, unsafe.Sizeof(mcache{}), nil, nil, &memstats.mcache_sys)

fixAlloc_Init(&h.specialfinalizeralloc, unsafe.Sizeof(specialfinalizer{}), nil, nil, &memstats.other_sys)

fixAlloc_Init(&h.specialprofilealloc, unsafe.Sizeof(specialprofile{}), nil, nil, &memstats.other_sys)

To better understand what an allocator is, let’s see how it is utilized. All allocators operate in the fixAlloc_Alloc function, called each time we want to allocate new mspan, mcache, specialfinalizer, and specialprofile structs. The main part of this function is as follows below.

if uintptr(f.nchunk) < f.size {

f.chunk = (*uint8)(persistentalloc(_FixAllocChunk, 0, f.stat))

f.nchunk = _FixAllocChunk

}

It allocates memory, but instead of allocating the actual size of the structure—f.size bytes—we set aside _FixAllocChunk bytes (currently, equal to 16 KB). The rest of the available space is stored in the allocator. Next time, we need to allocate a structure of the same type, it will not require calling persistentalloc, which can be time consuming.

The persistentalloc function is responsible for allocating memory that should not be garbage collected. Its workflow is as follows.

- If the allocated block is larger than 64 KB, it is allocated directly from OS memory.

- Otherwise, we first need to find a persistent allocator:

- A persistent allocator is attached to each processor. This is done to avoid using locks when working with a persistent allocator. So, we try to use a persistent allocator from the current processor.

- If we cannot obtain information about the current processor, a global system allocator is used.

- If the allocator does not have enough free memory in its cache, we set aside more memory from the OS.

- The required amount of memory is returned from the allocator’s cache.

The persistentalloc and fixAlloc_Alloc functions work in similar ways. It is possible to say that those functions implement two levels of caching. You should also be aware that persistentalloc is used not only in fixAlloc_Alloc, but also in many other places where we need to allocate persistent memory.

Let’s return to the mHeap_Init function. One more important question to answer here is how the four structures, for which allocators were initialized at the beginning of this function, are used:

mspanis a wrapper for a memory block that should be garbage collected. We have talked about it when discussing size classes. A newmspanis created when we need to allocate a new object of a particular size class.mcacheis a struct attached to each processor. It is responsible for caching spans. The reason for having a separate cache for each processor is to avoid locking.specialfinalizerallocis a struct that is allocated when theruntime.SetFinalizerfunction is called. This can be done if we want the system to execute some cleanup code when an object is cleared. A good example is theos.NewFilefunction that associates a finalizer with each new file. This finalizer should close the OS file descriptor.specialprofileallocis a struct employed in the memory profiler.

After initializing memory allocators, the mHeap_Init function initializes lists by calling mSpanList_Init, which is very simple. All it does is initialize the first entry for the linked list. The mheap struct contains a few such linked lists.

mheap.freeandmheap.busyare arrays that contain free and busy lists with spans for large objects (larger than 32 KB, but smaller than 1 MB). Each of these arrays contains one item per each possible size. Here, sizes are measured in pages. One page is equal to 32 KB. The first item contains a list with 32 KB spans, the second one contains a list with 64 KB spans, and so on.mheap.freelargeandmheap.busylargeare free and busy lists for objects larger than 1 MB.

The next step is to initialize mheap.central, which stores spans for small objects (less than 32 KB). In mheap.central, lists are grouped accordingly to their size classes. Initialization is very similar to what we have seen previously. It is simply initialization of linked lists for each free list.

Initializing the cache

Now, we are almost done with memory allocator initialization. The last thing that is left in the mallocinit function is mcache initialization.

_g_ := getg() _g_.m.mcache = allocmcache()

Here, we first obtain the current goroutine. Each goroutine contains a link to the m struct, which is a wrapper around the operating system thread. Inside this struct, there is the mcache field that is initialized in these lines. The allocmcache function calls fixAlloc_Alloc to initialize a new mcache struct. We have already discussed how allocation is done and the meaning of this struct (see above).

A careful reader may notice that we have previously said mcache is attached to each processor, but now we see that it is attached to the m struct, which corresponds to an OS process, not a processor. And that is correct—mcache is initialized only for those threads that are currently executed and it is relocated to another thread whenever a process switch occurs.

In the next post, we will continue discussing the bootstrap process by looking at how the garbage collector is initialized and how the main goroutine is started. Meanwhile, don’t hesitate to share your thoughts and suggestions in the comments below.

Further reading

- Golang Internals, Part 1: Main Concepts and Project Structure

- Golang Internals, Part 2: Diving Into the Go Compiler

- Golang Internals, Part 3: The Linker, Object Files, and Relocations

- Golang Internals, Part 4: Object Files and Function Metadata

- Golang Internals, Part 5: the Runtime Bootstrap Process