Hadoop + GPU: Boost Performance of Your Big Data Project by 50x–200x?

Learn about possible bottlenecks when offloading Hadoop calculations from a CPU to a GPU and what libraries/frameworks to use.


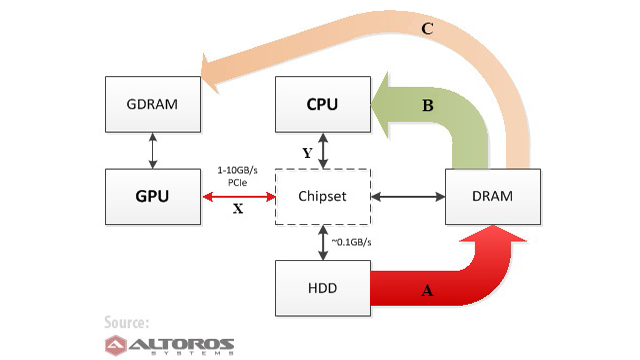

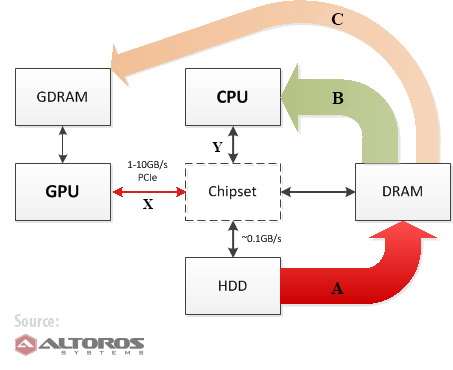

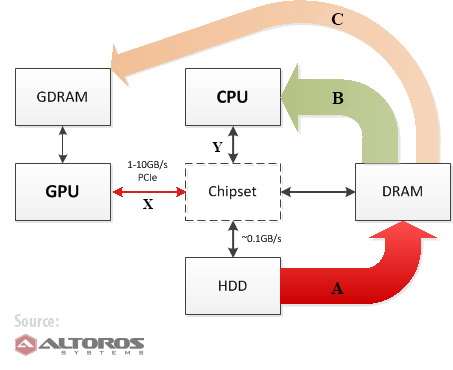

Hadoop, an open-source framework that enables distributed computing, has changed the way we deal with big data. Parallel processing with this set of tools can improve performance several times over. The question is whether we can make it run even faster. What about offloading calculations from a CPU to a graphics processing unit (GPU), which is designed for complex 3D and mathematical tasks? In theory, if the process is optimized for parallel computing, a GPU could perform calculations 50–100 times faster than a CPU.

Read this article at NetworkWorld to find out what is possible, and how you can try this for your large-scale system.

Check out this white paper to explore the idea in detail.


Vladimir Starostenkov is a Senior R&D Engineer at Altoros. He focuses on implementing complex software architectures, including data-intensive systems and Hadoop-driven apps. With a background in computer science, Vladimir is passionate about artificial intelligence and machine learning algorithms. His NoSQL and Hadoop studies have been published in NetworkWorld, CIO.com, and other industry media.
